Abstract:

An automated software testing technique is presented which solves the fragile test problem of white-box testing by allowing us to ensure that the component-under-test interacts with its collaborators according to our expectations without having to stipulate our expectations as test code, without having the tests fail each time our expectations change, and without having to go fixing test code each time this happens.

(Useful pre-reading: About these papers)

Summary

In automated software testing it is sometimes necessary to ensure not only that, given specific input, the component-under-test produces correct output (Black-Box Testing), but also that while doing so it interacts with its collaborators in certain expected ways (White-Box Testing). The prevailing technique for white-box testing (Mock Objects) requires copious amounts of additional code in the tests to describe the interactions that are expected to happen, and fails the tests if the actual interactions deviate from the expected ones.

Unfortunately, the interactions often change for various reasons, for example applying a bug fix, refactoring, or modifying existing code to accommodate new code that introduces new functionality. As a result, tests keep breaking all the time (the Fragile Test problem) and require constant maintenance, which imposes a heavy burden on the Software Development process.

Collaboration Monitoring is a technique for white-box testing where during a test run we record detailed information about the interactions between collaborators, we compare the recording against that of a previous test run, and we visually examine the differences to determine whether the changes observed in the interactions are as expected according to the changes that were made in the code. Thus, no code has to be written to describe in advance how collaborators are expected to interact, and no tests have to be fixed each time the expectations change.

The problem

Most software testing as conventionally practiced all over the world today consists of two parts:

- Result Validation: ascertaining that given specific input, the component-under-test produces specific expected output.

- Collaboration Validation: ensuring that while performing a certain computation, the component-under-test interacts with its collaborators in specific expected ways.

As I argue elsewhere, in the vast majority of cases, Collaboration Validation is ill-advised, because it constitutes white-box testing; however, there are some cases where it is necessary, for example:

- In high-criticality software, which is all about safety, not only must the requirements be met, but nothing must be left to chance. Thus, the cost of white-box testing is justified, and the goal is in fact to ensure that the component-under-test not only produces correct results, but also interacts with its collaborators as expected while doing so.

- In reactive programming, the component-under-test does not produce output by returning results from function calls; instead, it produces output by forwarding results to collaborators. Thus, even if all we want to do is to ascertain the correctness of the component's output, we have to examine how it interacts with its collaborators, because that is the only way to observe its output.

The prevalent mechanism by which the Software Industry achieves Collaboration Validation today is Mock Objects. As I argue elsewhere (see michael.gr - If you are using mock objects you are doing it wrong), the use of mocks is generally ill-advised for various reasons, but with respect to Collaboration Validation specifically, the problem with mocks is that their use is extremely laborious:

- When we write a test for a certain component, it is counter-productive to have to stipulate in code exactly how we expect it to interact with its collaborators.

- When we revise the implementation of a component, the component may now legitimately start interacting with its collaborators in a different way; when this happens, it is counter-productive to have the tests fail, and to have to go fix them so that they stop expecting the old interactions and start expecting the new interactions.
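To make the two points above concrete, here is a minimal sketch of mock-style Collaboration Validation using a hand-rolled mock instead of a mocking library. The names `Mailer`, `Welcomer`, `MockMailer` and `WelcomerMockTest` are hypothetical and exist only for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical collaborator interface, for illustration only.
interface Mailer {
    void send(String to, String body);
}

// Hypothetical component-under-test.
class Welcomer {
    private final Mailer mailer;

    Welcomer(Mailer mailer) {
        this.mailer = mailer;
    }

    void welcome(String user) {
        mailer.send(user, "Welcome, " + user + "!");
    }
}

// Hand-rolled mock: remembers every call so that the test can compare
// the calls against expectations stipulated in test code.
class MockMailer implements Mailer {
    final List<String> calls = new ArrayList<>();

    @Override
    public void send(String to, String body) {
        calls.add("send(" + to + ", " + body + ")");
    }
}

class WelcomerMockTest {
    static void run() {
        MockMailer mock = new MockMailer();
        new Welcomer(mock).welcome("ann");
        // The expected interaction is spelled out here, in test code.
        // Any legitimate change to how Welcomer talks to Mailer makes
        // this comparison fail until the expectation is edited by hand.
        List<String> expected = List.of("send(ann, Welcome, ann!)");
        if (!mock.calls.equals(expected)) {
            throw new AssertionError("unexpected interactions: " + mock.calls);
        }
    }
}
```

If `Welcomer` were revised to, say, change the wording of the message, `WelcomerMockTest.run()` would fail even though nothing is broken, and the `expected` list would have to be rewritten.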

The original promise of automated software testing was to allow us to modify code without the fear of breaking it, but with the use of mocks the slightest modification to the code causes the tests to fail, so the code always looks broken, and the tests always require fixing.

This is particularly problematic in light of the fact that there is nothing about the concept of Collaboration Validation which requires that the interactions between collaborators must be stipulated in advance, nor that the tests must fail each time the interactions change; all that is required is that we must be able to tell whether the interactions between collaborators are as expected or not. Thus, Collaboration Validation does not necessitate the use of mocks; it could conceivably be achieved by some entirely different means.

The Solution

If we want to ensure that given specific input, a component produces expected results, we do of course have to write some test code to exercise the component as a black-box. If we also want to ensure that the component-under-test interacts with its collaborators in specific ways while it is being exercised, that is white-box testing, and it would be best if those expectations did not also have to be written in code. To achieve this without code, all we need is the ability to somehow capture the interactions so that we can visually examine them and decide whether they are in agreement with our expectations:

- If they are not as expected, then we have to keep working on the production code and/or the black-box testing code.

- If they are as expected, then we are done: we can commit our code, and call it a day, without having to modify any white-box tests!

The trick is to do so in a convenient, iterative, and fail-safe way, meaning that the following must hold true:

- When a change in the code causes a change in the interactions, there should be some kind of indication telling us that the interactions have now changed, and this indication should be so clear that we cannot possibly miss it.

- Each time we modify some code and run the tests, we want to be able to see what has changed in the interactions as a result of only those modifications, so that we do not have to pore through long lists of irrelevant interactions, and so that no information gets lost in the noise.

To achieve this, I use a technique that I call Collaboration Monitoring.

Collaboration Monitoring is based on another testing technique that I call Audit Testing, so it might be a good idea to read the related paper before proceeding: michael.gr - Audit Testing.

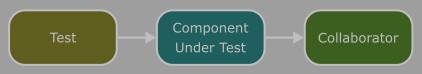

Let us assume that we have a component that we want to test, which invokes interface T as part of its job. In order to test the component, we have to wire it with a collaborator that implements T. For this, we can use either the real collaborator that would be wired in the production environment, or a Fake thereof. Regardless of what we choose, we have a very simple picture which looks like this:

Note that with this setup we can exercise the component-under-test as a black-box, but we cannot yet observe how it interacts with its collaborator.

In order to observe how the component-under-test interacts with its collaborator, we interject between the two of them a new component, called a Collaboration Monitor, which is a decorator of T. The purpose of this Collaboration Monitor is to record into a text file information about each function call that passes through it. The text file is called a Snoop File, and it is a special form of Audit File. (See michael.gr - Audit Testing.)

Thus, we now have the following picture:

The information that the Collaboration Monitor saves for each function call includes:

- The name of the function.

- A serialization of the value of each parameter that was passed to the function.

- A serialization of the return value of the function.
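As a rough illustration, a hand-written Collaboration Monitor could look something like the sketch below. The interface `PriceLookup`, the fake `FakePriceLookup`, the component `Checkout`, and the one-line-per-call text format are all assumptions made for this example; the actual Snoop File format is not specified here. A `Writer` stands in for the Snoop File.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.io.Writer;

// Hypothetical interface T invoked by the component-under-test.
interface PriceLookup {
    int priceOf(String item);
}

// Hypothetical Fake collaborator implementing T.
class FakePriceLookup implements PriceLookup {
    @Override
    public int priceOf(String item) {
        return item.length() * 10;
    }
}

// Hand-written Collaboration Monitor: a decorator of PriceLookup that
// forwards each call to the real collaborator and records the function
// name, the parameter value, and the return value.
class PriceLookupMonitor implements PriceLookup {
    private final PriceLookup delegee;
    private final Writer snoop;

    PriceLookupMonitor(PriceLookup delegee, Writer snoop) {
        this.delegee = delegee;
        this.snoop = snoop;
    }

    @Override
    public int priceOf(String item) {
        int result = delegee.priceOf(item);
        record("priceOf(\"" + item + "\") -> " + result);
        return result;
    }

    private void record(String line) {
        try {
            snoop.write(line);
            snoop.write('\n');
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

// Hypothetical component-under-test, wired with a PriceLookup.
class Checkout {
    private final PriceLookup prices;

    Checkout(PriceLookup prices) {
        this.prices = prices;
    }

    int total(String... items) {
        int sum = 0;
        for (String item : items) {
            sum += prices.priceOf(item);
        }
        return sum;
    }
}
```

A test would construct `new Checkout(new PriceLookupMonitor(new FakePriceLookup(), writer))`, exercise `total(...)` as a black box, and leave the recorded lines behind for inspection.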

As per Audit Testing, the Snoop File is saved in the source code tree, right next to the source code file of the test that generated it, and gets committed into the Source Code Repository / Version Control System along with the source code. For example, if we have `SuchAndSuchTest.java`, then after running the tests for the first time we will find a `SuchAndSuchTest.snoop` file right next to it. We can examine this file to ensure that the component-under-test interacted with the collaborator exactly as expected.

As we continue developing our system, the modifications that we make to the code will sometimes have no effect on how collaborators interact with each other, and sometimes will cause the collaborators to start interacting differently. Thus, as we continue running our tests while developing our system, we will be observing the following:

- For as long as the collaborations continue in exactly the same way, the contents of the Snoop Files remain unchanged, despite the fact that the files are re-generated on each test run.

- As soon as some collaborations change, the contents of some Snoop Files will change.

As per Audit Testing, we can then leverage our Version Control System and our Integrated Development Environment to take care of the rest of the workflow, as follows:

- When we make a revision in the production code or in the testing code, and as a result of this revision the interactions between the component-under-test and its collaborators are now even slightly different, we will not fail to take notice because our Version Control System will show the corresponding Snoop File as modified and in need of committing.

- By asking our Integrated Development Environment to show us a "diff" between the current Snoop File and the unmodified version, we can see precisely what has changed without having to pore through the entire Snoop File.

- If the observed interactions are not exactly what we expected them to be according to the revisions we just made, we keep working on our revision.

- When we are confident that the differences in the interactions are exactly as expected according to the changes that we made to the code, we commit our revision, along with the Snoop Files.

What about code review?

As per Audit Testing, the reviewer is able to see both the changes in the code, and the corresponding changes in the Snoop Files, and vouch for them, or not, as the case might be.

Requirements

For Collaboration Monitoring to work, Snoop Files must be free from non-deterministic noise, and it is best if they are also free from deterministic noise. For more information about these types of noise and what you can do about them, see michael.gr - Audit Testing.

Automation

When using languages like Java and C#, which support reflection and intermediate code generation, we do not have to write Collaboration Monitors by hand; we can instead create a facility which generates them automatically, on demand, at runtime. Such a facility can be very easily written with the help of Intertwine (see michael.gr - Intertwine.)

Using Intertwine, we can create a Collaboration Monitor for any interface T. Such a Collaboration Monitor works as follows:

- Contains an Entwiner of T so that it can expose interface T without any hand-written code implementing interface T. The Entwiner delegates to an instance of `AnyCall`, which expresses each invocation in a general-purpose form.

- Contains an implementation of `AnyCall` which serializes all necessary information about the invocation into the Snoop File.

- Contains an Untwiner of T, so that it can convert each invocation from `AnyCall` back to an invocation of T, without any hand-written code for invoking interface T.
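The Intertwine API is not shown in this article, so the sketch below does not use it. Instead it illustrates the same general idea with the JDK's `java.lang.reflect.Proxy`, which is a different mechanism: roughly speaking, the proxy plays the role of the Entwiner, the `InvocationHandler` plays the role of `AnyCall`, and `Method.invoke` plays the role of the Untwiner. The names `ProxyMonitor`, `monitor` and `Greeter`, and the text format of the recorded lines, are assumptions made for this example.

```java
import java.io.Writer;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.Arrays;
import java.util.stream.Collectors;

// Hypothetical interface T, used only for illustration.
interface Greeter {
    String greet(String name);
}

final class ProxyMonitor {
    private ProxyMonitor() {}

    // Creates, at runtime, a decorator of the given interface which
    // forwards every call to 'delegee' and records it into 'snoop'.
    static <T> T monitor(Class<T> interfaceType, T delegee, Writer snoop) {
        InvocationHandler handler = (proxy, method, args) -> {
            Object result;
            try {
                // Convert the general-purpose call back into a real call.
                result = method.invoke(delegee, args);
            } catch (InvocationTargetException e) {
                // Rethrow whatever the real collaborator threw.
                throw e.getCause();
            }
            // 'args' is null for methods without parameters.
            String params = args == null ? "" : Arrays.stream(args)
                    .map(a -> String.valueOf(a))
                    .collect(Collectors.joining(", "));
            // Void methods are recorded with a result of "null".
            snoop.write(method.getName() + "(" + params + ") -> " + result + "\n");
            return result;
        };
        Object monitor = Proxy.newProxyInstance(
                interfaceType.getClassLoader(),
                new Class<?>[] {interfaceType},
                handler);
        return interfaceType.cast(monitor);
    }
}
```

With this in place, `ProxyMonitor.monitor(Greeter.class, realGreeter, writer)` yields a `Greeter` that can be wired into the component-under-test in place of the real collaborator. Note that this sketch relies on `String.valueOf` for serialization, which is only adequate for values whose `toString()` output is stable from run to run.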

Comparison of Workflows

Here is a step-by-step comparison of the software development process when using mocks, and when using collaboration monitoring.

Workflow using Mock Objects:

1. Modify the production code and/or the black-box part of the tests.
2. Run the tests.
   - If the tests pass:
     - Done.
   - If the tests fail:
     - Troubleshoot why this is happening.
     - If either the production code or the black-box part of the tests is wrong:
       - Go to step 1.
     - If the white-box part of the tests is wrong:
       - Modify the white-box part of the tests (the mocking code) to stop expecting the old interactions and start expecting the new interactions.
       - Go to step 2.

Workflow using Collaboration Monitoring:

1. Modify the production code and/or the tests.
2. Run the tests.
   - If the tests pass:
     - If the interactions have remained unchanged:
       - Done.
     - If the interactions have changed:
       - Visually inspect the changes.
       - If the interactions agree with our expectations:
         - Done.
       - If the interactions differ from our expectations:
         - Go to step 1.
   - If the tests fail:
     - Go to step 1.

Conclusion

Collaboration Monitoring is an adaptation of Audit Testing which allows the developer to write black-box tests which only exercise the public interface of the component-under-test, while remaining confident that the component interacts with its collaborators inside the black box according to their expectations, without having to write white-box testing code to stipulate the expectations, and without having to modify white-box testing code each time the expectations change.

Cover image: "Collaboration Monitoring" by michael.gr based on original work 'monitoring' by Arif Arisandi and 'Gears' by Free Fair & Healthy from the Noun Project.
